
In-depth analysis by standard academic criteria shows that MOOCs have more academic rigor and are a far more effective teaching methodology than in-house teaching

Benton R. Groves, Ph.D. student

My big concern with xMOOCs is their limitation, as currently designed, for developing the higher order intellectual skills needed in a digital world.

Tony Bates

5.4.1 The research on MOOCs

A lot of the research to date on MOOCs comes from the institutions offering MOOCs, mainly in the form of reports on enrolments, or self-evaluation by instructors. The commercial platform providers such as Coursera and Udacity have provided limited research information overall, which is a pity, because they have access to really big data sets. However, MIT and Harvard, the founding partners in edX, have conducted some research, mainly on their own courses.

In this chapter, I have drawn on available evidence-based research that provides insight into the strengths and weaknesses of MOOCs. At the same time, we should be clear that we are discussing a phenomenon that to date has been marked largely by political, emotional and often irrational discourse, rather than by discourse grounded in evidence.

Lastly, it should be remembered that in this evaluation I am applying the criterion of whether MOOCs are likely to lead to the kinds of learning needed in a digital age: in other words, do they help develop the knowledge and skills defined in Chapter 1?

5.4.2 Open and free education

5.4.2.1 The ‘open-ness’ of MOOCs

MOOCs, particularly xMOOCs, deliver high quality content from some of the world’s best universities to anyone with a computer and an Internet connection. This in itself is an amazing value proposition. In this sense, MOOCs are an incredibly valuable addition to education. Who could argue against this?

However, MOOCs are not the only form of open and free education. Libraries, open textbooks and educational broadcasting are also open and free and have been for some time. There are also lessons we can learn from these earlier forms of open and free education that also apply to MOOCs.

Furthermore, MOOCs are not always open in the sense of open educational resources. Coursera and Udacity, for instance, offer limited access to their material for re-use without permission. On other more open platforms, such as edX, individual faculty or institutions may restrict re-use of material. Lastly, many MOOCs exist for only one or two years then disappear, which limits their use as open educational resources for re-use in other courses or programs.

5.4.2.2 A replacement for conventional education?

It is worth noting that these earlier forms of open and free education did not replace the need for formal, credit-based education, but were used to supplement or strengthen it. In other words, MOOCs are a tool for continuing and informal education, which has high value in its own right. As we shall see, though, MOOCs work best when people are already reasonably well educated. There is no reason to believe then that because MOOCs are open and free to end-users, they will inevitably force down the cost of conventional higher education, eliminate the need for it altogether, or bring effective higher education to the masses.

5.4.2.3 The answer for education in developing countries?

There have been many attempts to use educational broadcasting and satellite broadcasting in developing countries to open up education for the masses (see Bates, 1984), and they all substantially failed to increase access or reduce cost for a variety of reasons, the most important being:

- the high cost of ground equipment (including security from theft or damage);

- the need for local face-to-face support for learners without high levels of education;

- the need to adapt content to the culture and needs of the receiving countries;

- the difficulty of covering the operational costs of management and administration, especially for assessment, qualifications and local accreditation.

Also, the priority in most developing countries is not university courses from high-level Stanford University professors, but low cost, good quality high school education.

Although mobile phones and to a lesser extent tablets are widespread in Africa, they are relatively expensive to use. For instance, it costs US$2 to download a typical YouTube video – equivalent to a day’s salary for many Africans. Streamed 50 minute video lectures then have limited applicability.

Lastly, it is frankly immoral to allow people in developing countries to believe that successful completion of MOOCs will lead to a recognised degree or to university entrance in the USA or in any other economically advanced country, at least under present circumstances.

This is not to say that MOOCs could not be valuable in developing countries, but this will mean:

- being realistic as to what they can actually deliver;

- developing locally produced MOOCs that are recognised by and integrated with existing educational systems, such as India’s SWAYAM MOOCs;

- ensuring that the necessary student support – which costs real money – is put in place;

- adapting the design, content and delivery of MOOCs to the cultural and economic requirements of those countries.

Finally, although MOOCs are in the main free for participants, they are not without substantial cost to MOOC providers, an issue that will be discussed in more detail in Section 5.4.8.

5.4.3 The audience that MOOCs mainly serve

In a research report from Ho et al. (2014), researchers at Harvard University and MIT found that on the first 17 MOOCs offered through edX,

- 66 per cent of all participants, and 74 per cent of all who obtained a certificate, had a bachelor’s degree or above;

- 71 per cent were male, and the average age was 26;

- this and other studies also found that a high proportion of participants came from outside the USA, ranging from 40 to 60 per cent of all participants, indicating strong interest internationally in open access to high quality university teaching.

In a study based on over 80 interviews in 62 institutions ‘active in the MOOC space’, Hollands and Tirthali (2014), researchers at Columbia University Teachers’ College, found that:

Data from MOOC platforms indicate that MOOCs are providing educational opportunities to millions of individuals across the world. However, most MOOC participants are already well-educated and employed, and only a small fraction of them fully engages with the courses. Overall, the evidence suggests that MOOCs are currently falling far short of “democratizing” education and may, for now, be doing more to increase gaps in access to education than to diminish them.

Thus MOOCs, as is common with most forms of university continuing education, cater to the better educated, older and employed sectors of society.

5.4.4 Persistence and commitment: the onion hypothesis

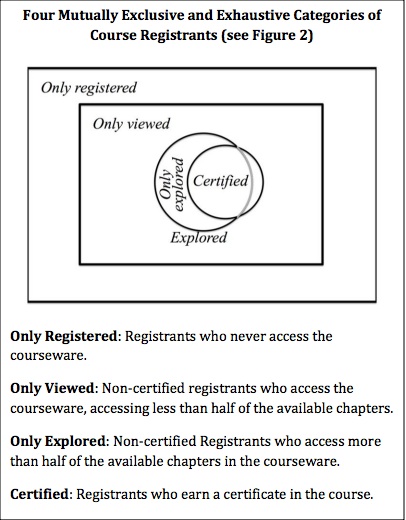

The edX researchers (Ho et al., 2014) identified different levels of commitment as follows across 17 edX MOOCs:

- only registered: registrants who never access the courseware (35 per cent);

- only viewed: non-certified registrants who access the courseware, accessing less than half of the available chapters (56 per cent);

- only explored: non-certified registrants who access more than half of the available chapters in the courseware, but did not get a certificate (4 per cent);

- certified: registrants who earn a certificate in the course (5 per cent).
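The four categories above amount to a simple decision rule, which can be sketched as follows. This is an illustrative sketch only, not the edX researchers' code: the function name and its parameters are hypothetical, and because the definitions above do not say how a registrant who accessed exactly half the chapters is treated, the sketch assumes such a registrant counts as 'only viewed'.

```python
def commitment_level(accessed_courseware: bool,
                     share_of_chapters: float,
                     certified: bool) -> str:
    """Place one registrant in one of the four commitment categories.

    share_of_chapters is the fraction (0.0 to 1.0) of available chapters
    the registrant accessed. All names here are hypothetical.
    """
    if certified:
        return "certified"
    if not accessed_courseware:
        return "only registered"
    if share_of_chapters > 0.5:
        return "only explored"
    # Assumption: exactly half is treated as "only viewed".
    return "only viewed"


# Percentages reported across the 17 edX MOOCs, as listed above.
reported_shares = {
    "only registered": 35,
    "only viewed": 56,
    "only explored": 4,
    "certified": 5,
}

# The four categories are exhaustive, so the shares account for all registrants.
print(sum(reported_shares.values()))
```

Read this way, the categories are mutually exclusive, which is why the four reported percentages account for every registrant.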

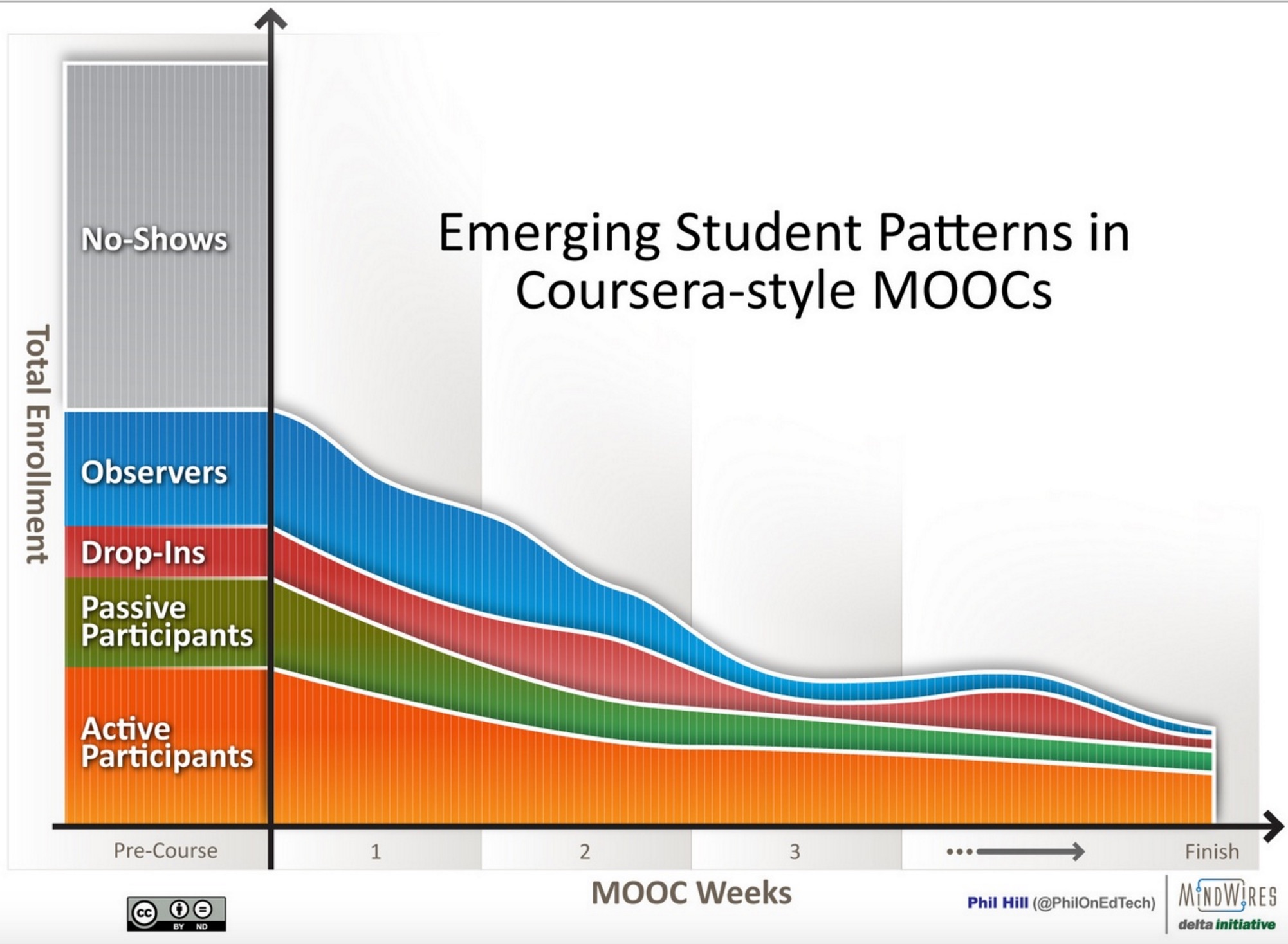

Hill (2013) has identified five types of participants in Coursera courses:

Engle (2014) found similar patterns (also replicated in other studies) for the University of British Columbia MOOCs on Coursera:

- of those that initially sign up, between one third and a half do not participate in any other active way;

- of those that participate in at least one activity, between 5 and 10 per cent go on to successfully complete a certificate.

Those going on to achieve certificates usually fall within the 5-10 per cent range of those that sign up, and within the 10-20 per cent range of those who actively engaged with the MOOC at least once. Nevertheless, the numbers obtaining certificates are still large in absolute terms: over 43,000 across 17 courses on edX and 8,000 across four courses at UBC (between 2,000 and 2,500 certificates per course).
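The relationship between the two ranges just quoted is simple arithmetic: a certificate rate measured against all sign-ups rises when it is measured only against those who were active at least once. The sketch below works this through for the two ends of the 'one third to a half' inactive range. It is an illustration using only the round figures in the text; the function name is hypothetical and nothing here is drawn from the underlying data sets.

```python
def rate_among_active(rate_among_signups: float, inactive_share: float) -> float:
    """Convert a certificate rate per sign-up into a rate per active participant."""
    return rate_among_signups / (1.0 - inactive_share)


for inactive_share in (1 / 3, 1 / 2):
    low = rate_among_active(0.05, inactive_share)
    high = rate_among_active(0.10, inactive_share)
    print(f"inactive share {inactive_share:.0%}: {low:.1%} to {high:.1%} of active participants")

# Average certificates per course implied by the absolute figures in the text.
edx_per_course = 43_000 / 17   # "over 43,000 across 17 courses"
ubc_per_course = 8_000 / 4     # "8,000 across four courses"
print(round(edx_per_course), round(ubc_per_course))
```

When half of those who sign up never participate, a 5-10 per cent rate among sign-ups corresponds to a 10-20 per cent rate among active participants.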

Milligan et al. (2013) found a similar pattern of commitment in cMOOCs, from interviewing a small sample of participants (29 out of 2,300 registrants) about halfway through a cMOOC:

- passive participants: in Milligan’s study these were those who felt lost in the MOOC and logged in only occasionally;

- lurkers: they were actively following the course but did not engage in any of the activities (just under half those interviewed);

- active participants (again, just under half those interviewed) who were fully engaged in the course activities.

Reich and Ruipérez-Valiente (2019) in their study of edX MOOCs reported that:

..after promising a reordering of higher education, we see the field instead coalescing around a different, much older business model: helping universities outsource their online master’s degrees for professionals. The vast majority of MOOC learners never return after their first year, the growth in MOOC participation has been concentrated almost entirely in the world’s most affluent countries, and the bane of MOOCs—low completion rates—has not improved over 6 years.

MOOCs need to be judged for what they are, a somewhat unique – and valuable – form of non-formal education. These results are very similar to research into non-formal educational broadcasts (e.g. the History Channel). One would not expect a viewer to watch every episode of a History Channel series then take an exam at the end. Ho et al. (p.13) produced the following diagram to show the different levels of commitment to xMOOCs:

This is remarkably similar to my onion hypothesis of educational broadcasting in Britain (Bates, 1984):

(p.99): At the centre of the onion is a small core of fully committed students who work through the whole course, and, where available, take an end-of-course assessment or examination. Around the small core will be a rather larger layer of students who do not take any examination but do enrol with a local class or correspondence school. There may be an even larger layer of students who, as well as watching and listening, also buy the accompanying textbook, but who do not enrol in any courses. Then, by far the largest group, are those that just watch or listen to the programmes. Even within this last group, there will be considerable variations, from those who watch or listen fairly regularly, to those, again a much larger number, who watch or listen to just one programme.

I also wrote (p.100):

A sceptic may say that the only ones who can be said to have learned effectively are the tiny minority that worked right through the course and successfully took the final assessment…A counter argument would be that broadcasting can be considered successful if it merely attracts viewers or listeners who might otherwise have shown no interest in the topic; it is the numbers exposed to the material that matter…the key issue then is whether broadcasting does attract to education those who would not otherwise have been interested, or merely provides yet another opportunity for those who are already well educated…There is a good deal of evidence that it is still the better educated in Britain and Europe that make the most use of non-formal educational broadcasting.

Exactly the same could be said about MOOCs. In a digital age where easy and open access to new knowledge is critical for those working in knowledge-based industries, MOOCs will be one valuable source or means of accessing that knowledge. The issue, though, is whether there are more effective ways to do this. Thus MOOCs can be considered a useful – but not really revolutionary – contribution to non-formal continuing education.

5.4.5 What do students learn in MOOCs?

This is a much more difficult question to answer, because so little of the research to date (2022) has tried to answer this question. (One reason, as we shall see in the next section, is that assessment of learning in MOOCs remains a major challenge). There are at least two kinds of study: quantitative studies that seek to quantify learning gains; and qualitative studies that describe the experience of learners within MOOCs, which indirectly provide some insight into what they have learned.

5.4.5.1 Conceptual learning

At the time of writing, the most quantitative study of learning in MOOCs has been by Colvin et al. (2014), who investigated ‘conceptual learning’ in an MIT Introductory Physics MOOC. Colvin and colleagues compared learner performance not only between different sub-categories of learners within the xMOOC, such as those with no physics or math background and those, such as physics teachers, who had considerable prior knowledge, but also with on-campus students taking the same curriculum in a traditional campus teaching format. In essence, the study found no significant differences in learning gains between or within the two types of teaching, but it should be noted that the on-campus students were students who had failed an earlier version of the course and were retaking it.

This research is a classic example of the ‘no significant difference’ finding in comparative studies in educational technology; other variables, such as differences in the types of students, were as important as the mode of delivery (for more on the ‘no significant difference’ phenomenon in media comparisons, see Chapter 10, Section 2.2). Also, this xMOOC design represents a behaviourist-cognitivist approach to learning that places heavy emphasis on correct answers to conceptual questions. It doesn’t attempt to develop the skills needed in a digital age as identified in Chapter 1.

5.4.5.2 The student experience

There have been far more studies of the experience of learners within MOOCs, particularly focusing on the discussions within MOOCs (see for instance, Kop, 2011). In general (although there are exceptions), discussions are unmonitored, and it is left to participants to make connections and respond to other students’ comments.

However, there are some strong criticisms of the effectiveness of the discussion element of xMOOCs for developing the high-level conceptual analysis required for academic learning. There is evidence from studies of credit-based online learning that to develop deep, conceptual learning, there is a need in most cases for intervention by a subject expert to clarify misunderstandings or misconceptions, to provide accurate feedback, to ensure that the criteria for academic learning, such as use of evidence, clarity of argument, and so on, are being met, and to ensure the necessary input and guidance to seek deeper understanding (see in particular Harasim, 2017).

Furthermore, the more massive the course, the more likely participants are to feel ‘overload, anxiety and a sense of loss’, if there is not some instructor intervention or structure imposed (Knox, 2014). Firmin et al. (2014) have shown that when there is some form of instructor ‘encouragement and support of student effort and engagement’, results improve for all participants in MOOCs. Without a structured role for subject experts, participants are faced with a wide variety of quality in terms of comments and feedback from other participants. There is again a great deal of research on the conditions necessary for the successful conduct of collaborative and co-operative group learning (see for instance, Lave and Wenger, 1991, or Barkley, Major and Cross, 2014), and these findings certainly have not been generally applied to the management of MOOC discussions.

5.4.5.3 Networked and collaborative learning

One counter argument is that cMOOCs in particular develop a new form of learning based on networking and collaboration that is essentially different from academic learning, and cMOOCs are thus more appropriate to the needs of learners in a digital age. Adult participants in particular, it is claimed by Downes and Siemens, have the ability to self-manage the development of high level conceptual learning. cMOOCs are ‘demand’ driven, meeting the interests of individual students who seek out others with similar interests and the necessary expertise to support them in their learning, and for many this interest may well not include the need for deep, conceptual learning but more likely the appropriate applications of prior knowledge in new or specific contexts. All MOOCs do appear to work best for those who already have a high level of education and therefore bring many of the conceptual skills developed in formal education with them when they join a MOOC, and therefore contribute to helping those who come without such prior knowledge or skills.

5.4.5.4 The need for learner support

Over time, as more experience is gained, MOOCs are likely to incorporate and adapt some of the findings from research on smaller group work to the much larger numbers in MOOCs. For instance, some MOOCs are using ‘volunteer’ or community tutors. The US State Department organized MOOC camps through US missions and consulates abroad to mentor MOOC participants. The camps included Fulbright scholars and embassy staff who led discussions on content and topics for MOOC participants abroad (Haynie, 2014).

Some MOOC providers, such as the University of British Columbia, paid a small cohort of academic assistants to monitor and contribute to the MOOC discussion forums (Engle, 2014). Engle reported that the use of academic assistants, as well as limited but effective interventions from the instructors themselves, made the UBC MOOCs more interactive and engaging.

However, paying for people to monitor and support MOOCs will of course increase the cost to providers. Consequently, MOOCs are likely to develop new automated ways to manage discussion effectively in very large groups. For instance, the University of Edinburgh experimented with an automated ‘teacherbot’ that crawled through student and instructor Twitter posts associated with a MOOC and directed predetermined comments to students to prompt discussion and reflection (Bayne, 2015). These results and approaches are consistent with prior research on the importance of instructor presence for successful online learning in credit-based courses (see Chapter 4.4.3).

In the meantime, though, there is much work still to be done if MOOCs are to provide the support and structure needed to ensure deep, conceptual learning where this does not already exist in students. The development of the skills needed in a digital age is likely to be an even greater challenge when dealing with massive numbers. We need much more research into what participants actually learn in MOOCs and under what conditions before any firm conclusions can be drawn.

5.4.6 Assessment

Assessment of the massive numbers of participants in MOOCs has proved to be a major challenge. It is a complex topic that can be dealt with only briefly here. However, Chapter 6, Section 8 provides a general analysis of different types of assessment, and Suen (2014) provides a comprehensive and balanced overview of the way assessment has been used in MOOCs. This section draws heavily on Suen’s paper.

5.4.6.1 Computer marked assignments

Assessment to date in MOOCs has been primarily of two kinds. The first is based on quantitative multiple-choice tests, or response boxes where formulae or ‘correct code’ can be entered and automatically checked. Usually participants are given immediate automated feedback on their answers, ranging from simple right or wrong answers to more complex responses depending on the type of response checked, but in all cases, the process is usually fully automated.

For straight testing of facts, principles, formulae, equations and other forms of conceptual learning where there are clear, correct answers, this works well. In fact, multiple choice computer marked assignments were used by the UK Open University as long ago as the 1970s, although the means to give immediate online feedback were not available then. However, this method of assessment is limited for testing deep or ‘transformative’ learning, and particularly weak for assessing the intellectual skills needed in a digital age, such as creative or original thinking.

5.4.6.2 Peer assessment

Another type of assessment that has been tried in MOOCs has been peer assessment, where participants assess each other’s work. Peer assessment is not new. It has been successfully used for formative assessment in traditional classrooms and in some online teaching for credit (Falchikov and Goldfinch, 2000; van Zundert et al., 2010). More importantly, peer assessment is seen as a powerful way to improve deep understanding and knowledge through the process of students evaluating the work of others, and at the same time, it can be useful for developing some of the skills needed in a digital age, such as critical thinking, for those participants assessing other participants.

However, a key feature of the successful use of peer assessment has been the close involvement of an instructor or teacher, in providing benchmarks, rubrics or criteria for assessment, and for monitoring and adjusting peer assessments to ensure consistency and a match with the benchmarks set by the instructor. Although an instructor can provide the benchmarks and rubrics in MOOCs, close monitoring of the multiple peer assessments is difficult if not impossible with the very large numbers of participants. As a result, MOOC participants often become incensed at being randomly assessed by other participants who may not and often do not have the knowledge or ability to give a ‘fair’ or accurate assessment of another participant’s work.

Various attempts have been made to get round the limitations of peer assessment in MOOCs, such as calibrated peer reviews, based on averaging all the peer ratings, and Bayesian post hoc stabilization (Piech et al., 2013). Although these statistical techniques reduce the error (or spread) of peer review somewhat, they still do not remove the problems of systematic errors of judgement in raters due to misconceptions. This is particularly a problem where a majority of participants fail to understand key concepts in a MOOC, in which case peer assessment becomes the blind leading the blind.
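The distinction between spread and systematic error can be shown with a toy calculation. The sketch below is an illustration only: it uses plain averaging, not the calibrated peer review or Bayesian methods cited above, and every number in it is invented for the example. It assumes five raters who all share the same misconception, which pulls each rating down by the same amount, plus individual disagreements that happen to cancel out.

```python
from statistics import mean

true_score = 80          # hypothetical mark a subject expert would give
shared_bias = -15        # hypothetical effect of a misconception all raters share
individual_noise = [-6, 4, -2, 5, -1]   # hypothetical rater disagreements (sum to zero)

# Each peer rating is the true score shifted by the shared bias and the rater's own noise.
ratings = [true_score + shared_bias + noise for noise in individual_noise]

averaged = mean(ratings)
worst_single_error = max(abs(r - true_score) for r in ratings)

print(ratings)
print("largest error of any one rater:", worst_single_error)
print("error of the averaged rating:", averaged - true_score)
```

Averaging cancels the individual disagreements, so the combined rating is closer to the true score than the worst single rating, but the whole of the shared bias remains.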

5.4.6.3 Automated essay scoring

This is another area where there have been attempts to automate scoring (Balfour, 2013). Although such methods are increasingly sophisticated, they are currently limited in terms of accurate assessment to measuring primarily technical writing skills, such as grammar, spelling and sentence construction. Once again, they do not yet accurately measure longer essays where higher level intellectual skills are demanded.

5.4.6.4 Badges, certificates and microcredentials

Particularly in xMOOCs, participants may be awarded a certificate or a ‘badge’ for successful completion of the MOOC, based on a final test (usually computer-marked) which measures the level of learning in a course. However, most of the institutions offering MOOCs will not accept their own certificates for admission or credit within their own, campus-based programs. Probably nothing says more about the confidence in the quality of the assessment than this failure of MOOC providers to recognize their own teaching.

MOOC-based microcredentials are a more recent development. A microcredential is any one of a number of new certifications that covers more than a single course but is less than a full degree. Pickard (2018) provides an analysis of more than 450 MOOC-based microcredentials. Pickard states:

Microcredentials can be seen as part of a trend toward modularity and stackability in higher education, the idea being that each little piece of an education can be consumed on its own or can be aggregated with other pieces up to something larger. Each course is made of units, each unit is made of lessons; courses can stack up to Specializations or XSeries; these can stack up to partial degrees such as MicroMasters, or all the way up to full degrees (though only some microcredentials are structured as pieces of degrees).

However, in her analysis, Pickard found that in the microcredentials offered through the main MOOC platforms, such as Coursera, edX, Udacity and FutureLearn:

- student fees range from US$250 to US$17,000;

- some microcredentials, though not all, offer some opportunity to earn credit towards a degree program. Typically, university credit is awarded if and only if a student goes on to enroll in the particular degree program connected with the microcredential;

- they are not accredited, recognized, or evaluated by third party organizations (except insofar as they pertain to university degree programs). This variability and lack of standardization poses a problem for both learners and employers, as it makes it difficult to compare the various microcredentials;

- with so much variability, how would a prospective learner choose among the various options? Furthermore, without a detailed understanding of these options, how would an employer interpret or compare these microcredentials when they come up on a resume?

Nevertheless, in a digital age, both workers and employers will increasingly look for ways to ‘accredit’ units of learning smaller than a degree, but in ways that allow them to be stacked eventually towards a full degree. The issue is whether tying this to the MOOC movement is the best way to go.

Surely a better way would be to develop microcredentials as part of or in parallel with a regular online master’s program. For instance, as early as 2003, the University of British Columbia in its online Master of Educational Technology was allowing students to take single courses at a time, or the five foundation courses for a post-graduate certificate, or add four more courses and a project to the certificate for a full Master’s degree. Such microcredentials would not be MOOCs, unless (a) they are open to anyone and (b) they are free or at such a low cost anyone can take them. Then the issue becomes whether the institution will accept such MOOC-like credentials as part of a full degree. If not, employers are unlikely to recognise such microcredentials, because they will not know what they are worth.

5.4.6.5 The intent behind assessment

To evaluate assessment in MOOCs requires an examination of the intent behind assessment. There are many different purposes behind assessment (see Chapter 6, Section 8). Peer assessment and immediate feedback on computer-marked tests can be extremely valuable for formative assessment or feedback, enabling participants to see what they have understood and to help develop further their understanding of key concepts. In cMOOCs, as Suen points out, learning is measured as the communication that takes place between MOOC participants, resulting in crowdsourced validation of knowledge – it’s what the sum of all the participants come to believe to be true as a result of participating in the MOOC, so formal assessment is unnecessary. However, what is learned in this way is not necessarily academically validated knowledge, which to be fair, is not the concern of cMOOC proponents.

Academic assessment is a form of currency, related not only to measuring student achievement but also affecting student mobility (for example, entrance to graduate school) and perhaps more importantly employment opportunities and promotion. From a learner’s perspective, the validity of the currency – the recognition and transferability of the qualification – is essential. To date, MOOCs have been unable to demonstrate that they are able to assess accurately the learning achievements of participants beyond comprehension and knowledge of ideas, principles and processes (recognizing that there is some value in this alone). What MOOCs have not been able to demonstrate is that they can either develop or assess deep understanding or the intellectual skills required in a digital age. Indeed, this may not be possible within the constraints of massiveness, which is their major distinguishing feature from other forms of online learning.

5.4.7 Branding

Hollands and Tirthali (2014), in their survey on institutional expectations for MOOCs, found that building and maintaining brand was the second most important reason for institutions launching MOOCs (the most important was extending reach, which can also be seen as partly a branding exercise). Institutional branding through the use of MOOCs has been helped by elite universities such as Stanford, MIT and Harvard leading the charge, and by Coursera initially limiting access to its platform to only ‘top tier’ universities. This of course has led to a bandwagon effect, especially since many of the universities launching MOOCs had previously disdained to move into credit-based online learning. MOOCs provided a way for these elite institutions to jump to the head of the queue in terms of status as ‘innovators’ of online learning, even though they arrived late to the party.

It obviously makes sense for institutions to use MOOCs to bring their areas of specialist expertise to a much wider public, such as the University of Alberta offering a MOOC on dinosaurs, MIT on electronics, and Harvard on Ancient Greek Heroes. MOOCs certainly help to widen knowledge of the quality of an individual professor (who is usually delighted to reach more students in one MOOC than in a lifetime of on-campus teaching). MOOCs are also a good way to give a glimpse of the quality of courses and programs offered by an institution.

However, it is difficult to measure the real impact of MOOCs on branding. As Hollands and Tirthali put it:

While many institutions have received significant media attention as a result of their MOOC activities, isolating and measuring impact of any new initiative on brand is a difficult exercise. Most institutions are only just beginning to think about how to capture and quantify branding-related benefits.

In particular, these elite institutions do not need MOOCs to boost the number of applicants for their campus-based programs (none to date is willing to accept successful completion of a MOOC for admission to credit programs), since elite institutions have no difficulty in attracting already highly qualified students.

Furthermore, once every other institution starts offering MOOCs, the branding effect gets lost to some extent. Indeed, exposing poor quality teaching or course planning to many thousands can have a negative impact on an institution’s brand, as Georgia Institute of Technology found when one of its MOOCs crashed and burned (Jaschik, 2013). However, by and large, most MOOCs succeed in the sense of bringing an institution’s reputation in terms of knowledge and expertise to many more people than it would through any other form of teaching or publicity.

5.4.8 Costs and economies of scale

One main strength claimed for MOOCs is that they are free to participants. Once again this is more true in principle than in practice, because MOOC providers may charge a range of fees, especially for assessment. Furthermore, although MOOCs may be free for participants, they are not without substantial cost to the provider institutions. Also, there are large differences in the costs of xMOOCs and cMOOCs, the latter being generally much cheaper to develop, although there are still some opportunity or actual costs even for cMOOCs.

5.4.8.1 The cost of MOOC production and delivery

There is still very little information on the actual costs of designing and delivering a MOOC; there are not enough published studies to draw firm conclusions. However, we do have some data. The University of Ottawa (2013) estimated the cost of developing an xMOOC, based on figures provided to the university by Coursera, and on their own knowledge of the cost of developing online courses for credit, at around $100,000.

Engle (2014) has reported on the actual cost of five MOOCs from the University of British Columbia. There are two important features concerning the UBC MOOCs that do not necessarily apply to other MOOCs. First, the UBC MOOCs used a wide variety of video production methods, from full studio production to desktop recording, so development costs varied considerably, depending on the sophistication of the video production technique. Second, the UBC MOOCs made extensive use of paid academic assistants, who monitored discussions and adapted or changed course materials as a result of student feedback, so there were substantial delivery costs as well.

Appendix B of the UBC report gives a pilot total of $217,657, but this excludes academic assistance or, perhaps the most significant cost, instructor time. Academic assistance came to 25 per cent of the overall cost in the first year (excluding the cost of faculty). Working from the video production costs ($95,350) and the proportion of costs (44 per cent) devoted to video production in Figure 1 in the report, I estimate the direct cost at $216,700, or approximately $54,000 per MOOC, excluding faculty time and co-ordination support (that is, excluding program administration and overheads), but including academic assistance. However, the range of cost is almost as important. The video production costs for the MOOC which used intensive studio production were more than six times the video production costs of one of the other MOOCs.
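The estimate above can be reproduced with a short calculation. This is a minimal sketch using only the two figures quoted in the text (video production cost and its share of total cost); the function name is illustrative, and the divisor of four used for the per-MOOC figure is an assumption inferred from the quoted result of roughly $54,000, not a number stated in the report.

```python
# Figures quoted in the text, taken from the UBC report (Engle, 2014).
VIDEO_PRODUCTION_COST = 95_350  # dollars
VIDEO_SHARE_OF_COST = 0.44      # video production as a proportion of total cost


def estimate_total_cost(component_cost, component_share):
    """Scale a known cost component up to the total it implies."""
    return component_cost / component_share


total = estimate_total_cost(VIDEO_PRODUCTION_COST, VIDEO_SHARE_OF_COST)
print(f"Estimated direct cost: ${total:,.0f}")

# Assumption: four course-equivalents, inferred from the per-MOOC figure
# quoted in the text rather than stated in the source.
ASSUMED_COURSE_EQUIVALENTS = 4
per_mooc = total / ASSUMED_COURSE_EQUIVALENTS
print(f"Estimated cost per MOOC: ${per_mooc:,.0f}")
```

Rounded to the nearest hundred dollars, the total matches the $216,700 given above. The per-MOOC result depends entirely on the assumed divisor.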

5.4.8.2 The comparative costs of credit-based online courses

The main cost factors or variables in credit-based online and distance learning are relatively well understood, from previous research by Rumble (2001) and Hülsmann (2003). Using a similar costing methodology, I tracked and analysed the cost of an online master’s program at the University of British Columbia over a seven year period (Bates and Sangrà, 2011). This program used mainly a learning management system as the core technology, with instructors both developing the course and providing online learner support and assessment, assisted where necessary by extra adjunct faculty for handling larger class enrolments.

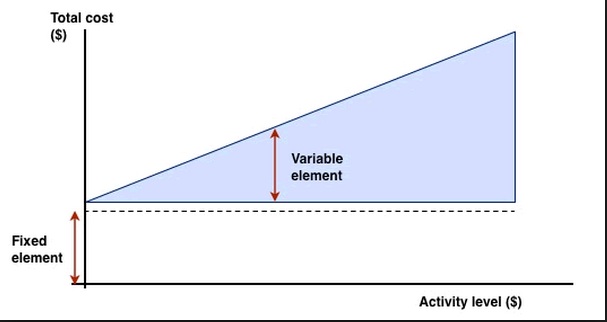

I found in my analysis of the costs of the UBC program that in 2003, development costs were approximately $20,000 to $25,000 per course. However, over a seven year period, course development constituted less than 15 per cent of the total cost, and occurred mainly in the first year or so of the program. Delivery costs, which included providing online learner support and student assessment, constituted more than a third of the total cost, and of course continued each year the course was offered. Thus in credit-based online learning, delivery costs tend to be more than double the development costs over the life of a program.
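The "more than double" conclusion follows directly from the two shares quoted above. As a rough check, and treating the quoted figures as bounds (development at most 15 per cent, delivery at least one third of total cost), the smallest possible ratio of delivery to development cost can be worked out as follows. The names are illustrative only.

```python
# Bounds quoted in the text for the UBC online master's program.
DEVELOPMENT_SHARE_MAX = 0.15  # development was less than 15 per cent of total cost
DELIVERY_SHARE_MIN = 1 / 3    # delivery was more than a third of total cost


def min_delivery_to_development_ratio(delivery_share_min, development_share_max):
    """Lower bound on delivery cost expressed as a multiple of development cost."""
    return delivery_share_min / development_share_max


ratio = min_delivery_to_development_ratio(DELIVERY_SHARE_MIN, DEVELOPMENT_SHARE_MAX)
print(f"Delivery costs are at least {ratio:.1f} times development costs")
```

Because development was below its bound and delivery above its bound, the real ratio would be higher than this minimum.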

The main difference then between MOOCs, credit-based online teaching, and campus-based teaching is that in principle MOOCs eliminate all delivery costs, because MOOCs do not provide learner support or instructor-delivered assessment, although again in practice this is not always true.

5.4.8.3 Opportunity costs

There is also clearly a large opportunity cost involved in offering xMOOCs. By definition, the most highly valued faculty are involved in offering MOOCs. In a large research university, such faculty are likely to have, at a maximum, a teaching load of four to six courses a year. Although most instructors volunteer to do MOOCs, their time is limited. Doing a MOOC means either dropping one credit course for at least one semester, equivalent to 25 per cent or more of their teaching load, or giving up time that would otherwise be spent on research. Furthermore, unlike credit-based courses, which typically run for five to seven years, MOOCs are often offered only once or twice.

5.4.8.4 Comparing the cost of MOOCs with online credit courses

However one looks at it, the cost of xMOOC development, without including the time of the MOOC instructor, tends to be almost double the cost of developing an online credit course using a learning management system, because of the use of video in MOOCs. If the cost of the instructor is included, xMOOC production costs come closer to three times that of a similar length online credit course, especially given the extra time faculty tend to put in for such a public demonstration of their teaching in a MOOC. xMOOCs could (and some do) use cheaper production methods, such as using an LMS instead of video for content delivery, or re-editing video recordings of classroom lectures made via lecture capture.

Without learner support or academic assistance, though, delivery costs for MOOCs are zero, and this is where the huge potential for savings exists. If the cost per participant is calculated, combining both production and delivery costs, the MOOC unit costs are very low. Even if the cost is calculated per student successfully obtaining an end of course certificate, it will be many times lower than the cost per successful student in an online or campus-based course. If we take a MOOC costing roughly $100,000 to develop, and 5,000 participants complete the end of course certificate, the average cost per successful participant is $20. However, this assumes that the same type of knowledge and skills is being assessed for both a MOOC and a graduate master's program; usually this is not the case.
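The unit cost calculation in the paragraph above is simple division, sketched here with the same illustrative figures ($100,000 development cost, 5,000 certificate completers, zero delivery cost). The function name and its parameters are hypothetical, chosen only to make the arithmetic explicit.

```python
def cost_per_successful_participant(development_cost, completers, delivery_cost=0.0):
    """Average cost per participant who obtains the end of course certificate.

    delivery_cost defaults to zero, reflecting the assumption in the text that
    a MOOC offers no learner support or academic assistance.
    """
    if completers <= 0:
        raise ValueError("completers must be a positive number")
    return (development_cost + delivery_cost) / completers


unit_cost = cost_per_successful_participant(100_000, 5_000)
print(f"Cost per successful participant: ${unit_cost:,.2f}")
```

Any learner support or human marking would enter through `delivery_cost` and raise the unit cost accordingly, which is the trade-off the surrounding discussion turns on.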

5.4.8.5 Costs versus outputs

The issue then is whether MOOCs can succeed without the cost of learner support and human assessment, or more likely, whether MOOCs can substantially reduce delivery costs through automation without loss of quality in learner performance. There is no evidence to date, though, that they can do this in terms of higher order learning skills and ‘deep’ knowledge. To assess this kind of learning requires setting assignments that test such knowledge, and such assessments usually need human marking, which then adds to cost. We also know from prior research on successful online credit programs that active instructor online presence is a critical factor for successful online learning. Thus adequate learner support and assessment remain a major challenge for MOOCs. MOOCs then are a good way to teach certain levels of knowledge but will have major structural problems in teaching other types of knowledge. Unfortunately, it is the type of knowledge most needed in a digital world that MOOCs struggle to teach.

5.4.8.6 MOOC business models and cost-benefits

Baker and Passmore (2016) examined several possible business models for sustaining MOOCs, but did not offer any actual costing. The elite universities have been able to move into xMOOCs because of generous donations from private foundations and use of endowment funds, but these forms of funding are limited for most institutions. Coursera and Udacity have the opportunity to develop successful business models through various means, such as charging MOOC provider institutions for use of their platform, collecting fees for badges or certificates, selling participant data, corporate sponsorship, or direct advertising.

However, particularly for publicly funded universities or colleges, most of these sources of income are not available or permitted, so it is hard to see how they can begin to recover the cost of a substantial investment in MOOCs, even with ‘cannibalising’ MOOC material for or from on-campus use. Every time a MOOC is offered, this takes away resources that could be used for online credit programs. Thus institutions are faced with some hard decisions about where to invest their resources for online learning. The case for putting scarce resources into MOOCs is far from clear, unless some way can be found to give credit for successful MOOC completion.

5.4.9 Summary of strengths and weaknesses

The main points of this analysis of the strengths and weaknesses of MOOCs can be summarised as follows:

5.4.9.1 Strengths

- MOOCs, particularly xMOOCs, deliver high quality content from some of the world’s best universities for free or at little cost to anyone with a computer and an Internet connection;

- MOOCs can be useful for opening access to high quality content, particularly in developing countries, but to do so successfully will require a good deal of adaptation, and substantial investment in local support and partnerships;

- MOOCs are valuable for developing basic conceptual learning, and for creating large online communities of interest or practice;

- MOOCs are an extremely valuable form of lifelong learning and continuing education;

- MOOCs have forced conventional and especially elite institutions to reappraise their strategies towards online and open learning;

- institutions have been able to extend their brand and status by making public their expertise and excellence in certain academic areas;

- MOOCs’ main value proposition is to eliminate through computer automation and/or peer-to-peer communication the very large variable costs in higher education associated with providing learner support and quality assessment.

5.4.9.2 Weaknesses

- the high registration numbers for MOOCs are misleading; fewer than half of registrants actively participate, and of these, only a small proportion successfully complete the course; nevertheless, absolute numbers completing are still higher than for conventional courses;

- MOOCs are expensive to develop, and although commercial organisations offering MOOC platforms have opportunities for sustainable business models, it is difficult to see how publicly funded higher education institutions can develop sustainable business models for MOOCs;

- MOOCs tend to attract those who already have a high level of education, rather than widen access;

- MOOCs so far have been limited in the ability to develop high level academic learning, or the high level intellectual skills needed in a digital society;

- assessment of the higher levels of learning remains a challenge for MOOCs, to the extent that most MOOC providers will not recognise their own MOOCs for credit;

- MOOC materials may be limited by copyright or time restrictions for re-use as open educational resources.

References

Baker, R. and Passmore, D. (2016) Value and Pricing of MOOCs Education Sciences, 27 May

Balfour, S. P. (2013) Assessing writing in MOOCs: Automated essay scoring and calibrated peer review Research & Practice in Assessment, Vol. 8

Barkley, E, Major, C.H. and Cross, K.P. (2014) Collaborative Learning Techniques San Francisco: Jossey-Bass/Wiley

Bates, A. (1984) Broadcasting in Education: An Evaluation London: Constables (out of print)

Bates, A. and Sangrà, A. (2011) Managing Technology in Higher Education San Francisco: Jossey-Bass/John Wiley and Co

Bayne, S. (2015) Teacherbot: interventions in automated teaching, Teaching in Higher Education, Vol. 20, No.4, pp. 455-467

Colvin, K. et al. (2014) Learning an Introductory Physics MOOC: All Cohorts Learn Equally, Including On-Campus Class, IRRODL, Vol. 15, No. 4

Engle, W. (2014) UBC MOOC Pilot: Design and Delivery Vancouver BC: University of British Columbia

Falchikov, N. and Goldfinch, J. (2000) Student Peer Assessment in Higher Education: A Meta-Analysis Comparing Peer and Teacher Marks Review of Educational Research, Vol. 70, No. 3

Firmin, R. et al. (2014) Case study: using MOOCs for conventional college coursework Distance Education, Vol. 35, No. 2

Harasim, L. (2017) Learning Theory and Online Technologies 2nd edition New York/London: Routledge

Haynie, D. (2014). State Department hosts ‘MOOC Camp’ for online learners. US News, January 20

Hill, P. (2013) Some validation of MOOC student patterns graphic, e-Literate, August 30

Ho, A. et al. (2014) HarvardX and MITx: The First Year of Open Online Courses Fall 2012-Summer 2013 (HarvardX and MITx Working Paper No. 1), January 21

Hollands, F. and Tirthali, D. (2014) MOOCs: Expectations and Reality New York: Columbia University Teachers’ College, Center for Benefit-Cost Studies of Education

Hülsmann, T. (2003) Costs without camouflage: a cost analysis of Oldenburg University’s two graduate certificate programs offered as part of the online Master of Distance Education (MDE): a case study, in Bernath, U. and Rubin, E., (eds.) Reflections on Teaching in an Online Program: A Case Study Oldenburg, Germany: Bibliotheks- und Informationssystem der Carl von Ossietzky Universität Oldenburg [no longer available online]

Jaschik, S. (2013) MOOC Mess, Inside Higher Education, February 4

Knox, J. (2014) Digital culture clash: ‘massive’ education in the e-Learning and Digital Cultures MOOC Distance Education, Vol. 35, No. 2

Kop, R. (2011) The Challenges to Connectivist Learning on Open Online Networks: Learning Experiences during a Massive Open Online Course International Review of Research into Open and Distance Learning, Vol. 12, No. 3

Lave, J. and Wenger, E. (1991). Situated Learning: Legitimate Peripheral Participation. Cambridge: Cambridge University Press

Milligan, C., Littlejohn, A. and Margaryan, A. (2013) Patterns of engagement in connectivist MOOCs, Merlot Journal of Online Learning and Teaching, Vol. 9, No. 2

Pickard, L. (2018) Analysis of 450 MOOC-Based Microcredentials Reveals Many Options But Little Consistency, Class Central, July 18

Piech, C., Huang, J., Chen, Z., Do, C., Ng, A., & Koller, D. (2013) Tuned models of peer assessment in MOOCs. Palo Alto, CA: Stanford University

Reich, J. and Ruipérez-Valiente, J. (2019) The MOOC Pivot, Science, Vol. 363, No. 6423

Rumble, G. (2001) The costs and costing of networked learning, Journal of Asynchronous Learning Networks, Vol. 5, No. 2

Suen, H. (2014) Peer assessment for massive open online courses (MOOCs) International Review of Research into Open and Distance Learning, Vol. 15, No. 3

University of Ottawa (2013) Report of the e-Learning Working Group Ottawa ON: The University of Ottawa

van Zundert, M., Sluijsmans, D., van Merriënboer, J. (2010). Effective peer assessment processes: Research findings and future directions Learning and Instruction, 20, 270-279

Activity 5.4 Assessing the strengths and weaknesses of MOOCs

1. Do you agree that MOOCs are just another form of educational broadcasting? What are your reasons?

2. Is it reasonable to compare the costs of xMOOCs to the costs of online credit courses? Are they competing for the same funds, or are they categorically different in their funding source and goals? If so, how?

3. Could you make the case that cMOOCs are a better value proposition than xMOOCs – or are they again too different to compare?

4. MOOCs are clearly cheaper than either face-to-face or online credit courses if judged on the cost per participant successfully completing a course. Is this a fair comparison, and if not, why not?

5. Do you think institutions should give credit for students successfully completing MOOCs? If so, why, and what are the implications?

I give my own personal views on these questions in the podcast below, but I’d like you to come to your own conclusions before listening to my response, because there are no right or wrong answers here: